3D ViewDist System

Large-format 3D projection system with precise computer-controlled viewing distance manipulations

In real-world environments, visual information is rarely limited to a single fixed viewing distance or confined to a frontoparallel plane, as it is on a typical computer display.

Horizontal binocular disparity, one of the most extensively studied cues in 3D perception, is just one component of the 'Binocular Flow Field' that encompasses the complete optic array & projective geometry afforded by binocular viewing of natural 3D environments (see Cormack, Czuba, Knoll, & Huk, 2017). Physical viewing distance in a 3D environment (defined by binocular convergence in depth) plays an important role in how binocular retinal projections correspond to real-world space.
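To make the link between viewing distance and binocular convergence concrete, here is a minimal Python sketch of the vergence angle for a point fixated straight ahead. The 6.5 cm interocular distance is an assumed typical value, and the function name is illustrative only; none of this is taken from the ViewDist software.

```python
import math


def vergence_angle_deg(viewing_distance_cm, interocular_cm=6.5):
    """Vergence angle (deg) when both eyes fixate a point on the midline.

    Assumes symmetric fixation straight ahead; interocular_cm is an
    assumed typical value, not a measurement from any subject.
    """
    if viewing_distance_cm <= 0:
        raise ValueError("viewing distance must be positive")
    half_angle = math.atan((interocular_cm / 2.0) / viewing_distance_cm)
    return math.degrees(2.0 * half_angle)


if __name__ == "__main__":
    # Convergence demand grows steeply as the fixation point approaches.
    for distance_cm in (30, 60, 120):
        print(f"{distance_cm:>4} cm -> {vergence_angle_deg(distance_cm):.2f} deg")
```

Under these assumptions the vergence angle is roughly four times larger at 30 cm than at 120 cm, which is why the same retinal disparity corresponds to very different physical depths across that range.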

To enable precise control of physical viewing distance during 3D visual perception experiments, I developed a novel hardware & software solution for binocular 3D stimulus presentation with on-the-fly, computer-controlled manipulations of physical viewing distance.

The 3D ViewDist System provides:

• Full projective 3D stimulus geometry

• On-the-fly adjustable viewing distance (30–120 cm)

• 1.8 m wide projection screen

• 154–73° field-of-view

• Frame-accurate rendering at 120 Hz per eye (240 Hz total)

• Rear-projection passive 3D stereo presentation (≤ 1% binocular crosstalk)
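As a rough illustration of how field of view depends on viewing distance, the following Python sketch computes the horizontal angle subtended by a flat screen. It assumes an idealized geometry (a flat screen, with the viewer centered on it and looking along its normal) and uses the nominal 1.8 m width; the function name is hypothetical. The near-distance result is very sensitive to the exact usable screen width and eye position, so this idealized calculation should not be expected to reproduce the quoted range exactly.

```python
import math


def horizontal_fov_deg(viewing_distance_cm, screen_width_cm=180.0):
    """Horizontal field of view (deg) of a flat screen seen from its center line.

    Idealized geometry: the viewer sits on the screen normal through the
    screen center, so each half of the screen subtends the same angle.
    """
    if viewing_distance_cm <= 0 or screen_width_cm <= 0:
        raise ValueError("distance and width must be positive")
    half_angle = math.atan((screen_width_cm / 2.0) / viewing_distance_cm)
    return math.degrees(2.0 * half_angle)


if __name__ == "__main__":
    for distance_cm in (30, 60, 90, 120):
        print(f"{distance_cm:>4} cm -> {horizontal_fov_deg(distance_cm):.1f} deg")
```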

Component details

I designed & constructed all components of this system myself, from scratch.

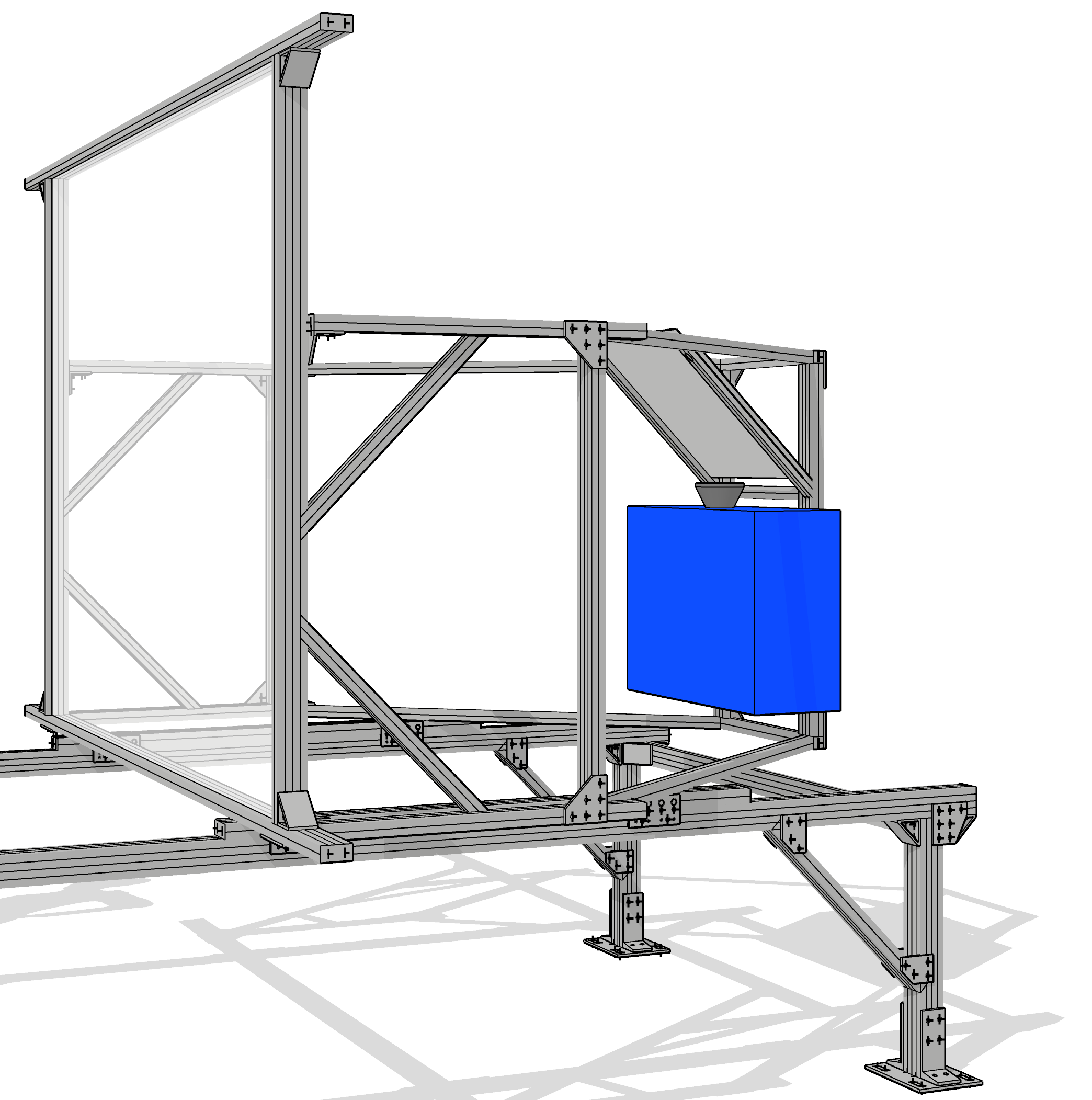

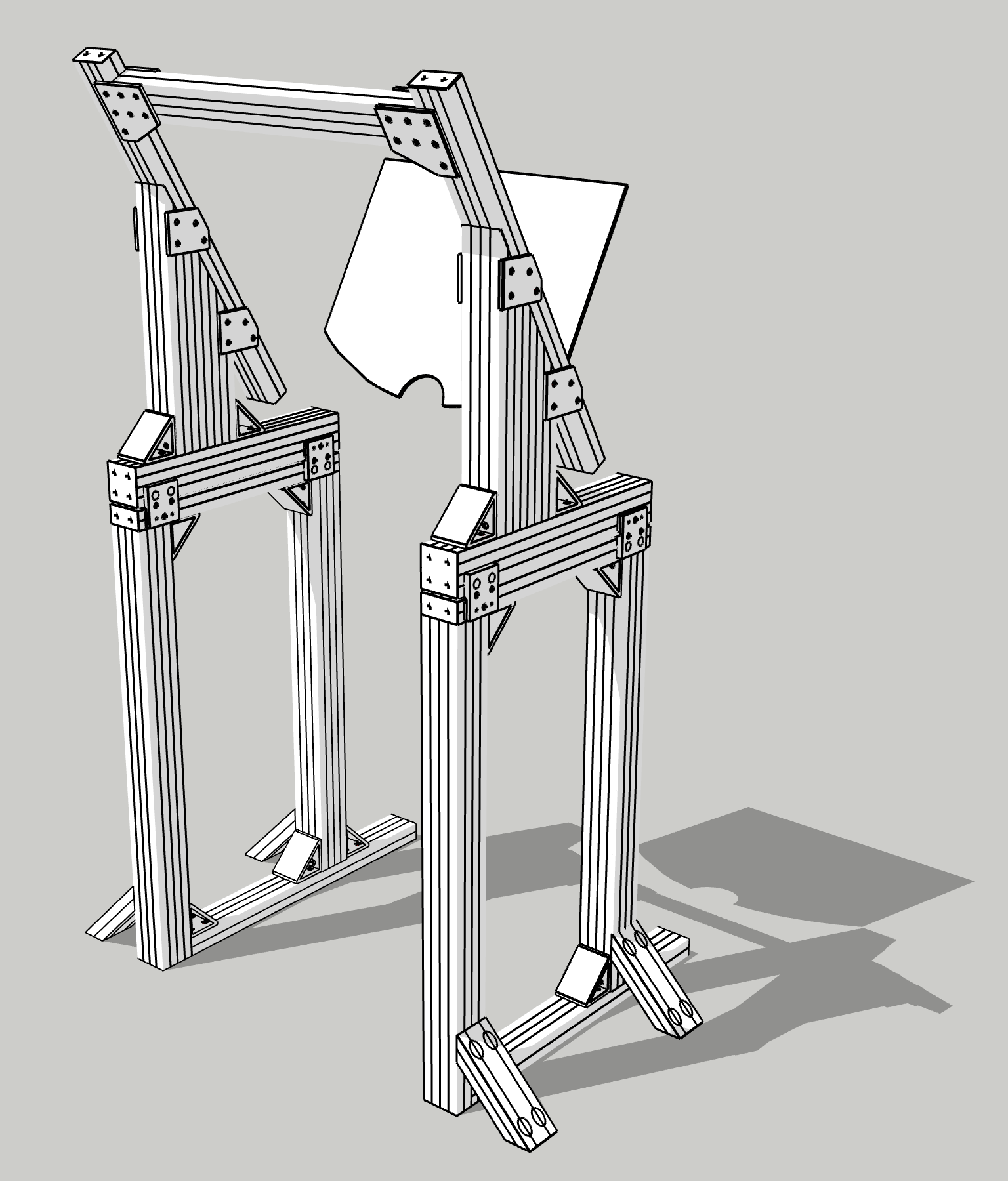

Plans were meticulously crafted in AutoCAD; bulk 80/20 aluminum extrusions were then ordered direct & cut to size & spec. Two instances of this room-filling installation were built at The University of Texas at Austin: one specialized for electrophysiology experiments [1], the other for psychophysical experiments [2,3].


The ViewDist's motorized display gantry allows accurate & precise viewing distance manipulations in a state-of-the-art 3D projection system. Because the ViewDist system is completely custom & purpose-built for 3D vision research, we are able to achieve research-grade precision with maximum flexibility across a wide range of challenging experimental domains (precise 3D presentation, advanced eye tracking, unique subject stabilization & positioning demands).

Frame & Gantry

Structural components were all designed from scratch in AutoCAD by Thad Czuba; bulk aluminum extrusions, brackets & hardware were then ordered direct from 80/20 Inc. using their handy "AutoQuoterX" plugin. The gantry was designed specifically to allow fine tuning of the optical path for either maximal display area or maximal pixel density within the narrow specifications of the ProPixx projector's short-throw optics (see the section on the 3D projection system).

Screen

The ViewDist screen is composed of a rigid acrylic glass projection screen (ST-PRO-DCF material from Screen-Tech) suspended within an aluminum extrusion frame via custom 3D printed slides affixed to the screen edge. This material was chosen for optimal image contrast & uniformity (especially important given the tight optical path) while preserving the polarization necessary for a passive-3D rear-projection system.

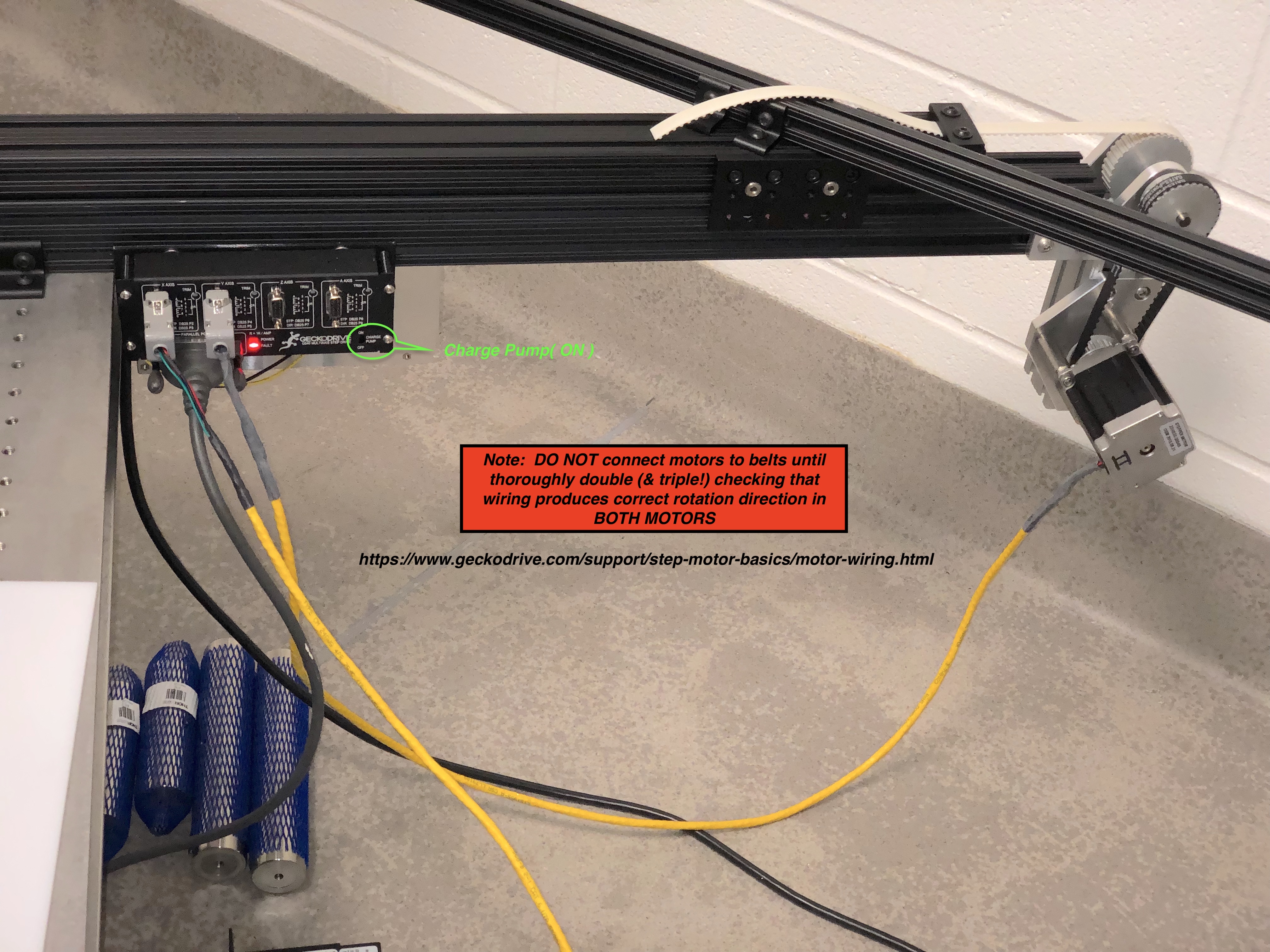

Computer-controlled positioning

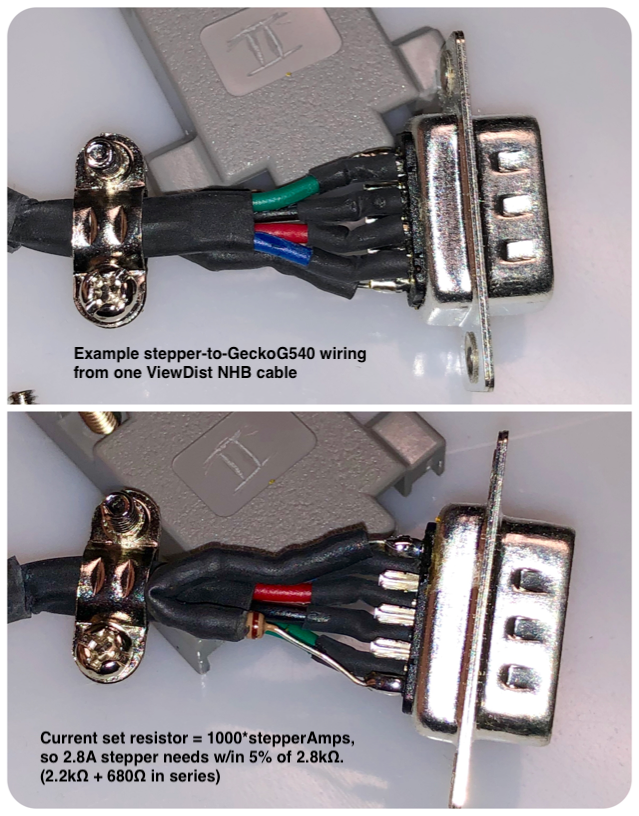

Precise viewing distance manipulations are achieved through a belt-drive* stepper-motor system controlled by an adapted Arduino-based CNC controller (GRBL) that receives on-the-fly commands via USB serial port from Matlab (via our PLDAPS experiment toolbox; see details below). The GRBL-flashed Arduino drives a pair of stepper motors, positioned at the distal ends of the gantry tracks, through a Gecko G540 four-axis digital step drive.

(* We found it important to use kevlar-reinforced urethane belts, rather than the steel-reinforced timing belts traditionally used for CNC equipment, to minimize stretching across the extended belt span without the loss of positional accuracy that would have accompanied simply moving to a larger belt.)
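The command path described above (experiment software to GRBL over a serial port) can be sketched as follows. This is a minimal Python illustration, not the PLDAPS/Matlab implementation: the function name, the choice of the X axis, and the mapping from viewing distance to machine coordinates (machine zero at the nearest position) are all assumptions made for the example. Only the G-code words themselves (`G21` for millimeter units, `G90` for absolute positioning, `G0` for a rapid move) are standard GRBL.

```python
MIN_DISTANCE_CM = 30.0   # nearest viewing distance quoted for the system
MAX_DISTANCE_CM = 120.0  # farthest viewing distance quoted for the system


def grbl_move_command(target_distance_cm, axis="X"):
    """Build one G-code line that moves the display to a viewing distance.

    Hypothetical convention: machine coordinate 0 mm corresponds to the
    nearest viewing distance, and the coordinate increases with distance.
    """
    if not MIN_DISTANCE_CM <= target_distance_cm <= MAX_DISTANCE_CM:
        raise ValueError(
            f"target {target_distance_cm} cm is outside "
            f"{MIN_DISTANCE_CM}-{MAX_DISTANCE_CM} cm travel"
        )
    position_mm = (target_distance_cm - MIN_DISTANCE_CM) * 10.0
    # G21: millimeters, G90: absolute coordinates, G0: rapid positioning move.
    return f"G21 G90 G0 {axis}{position_mm:.3f}\n"


if __name__ == "__main__":
    # The returned line would be written to the controller's serial port.
    print(grbl_move_command(60.0), end="")
```

Rejecting out-of-range targets before anything is sent is a deliberate choice in this sketch: it keeps a software guard in front of the physical limit switches rather than relying on them alone.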


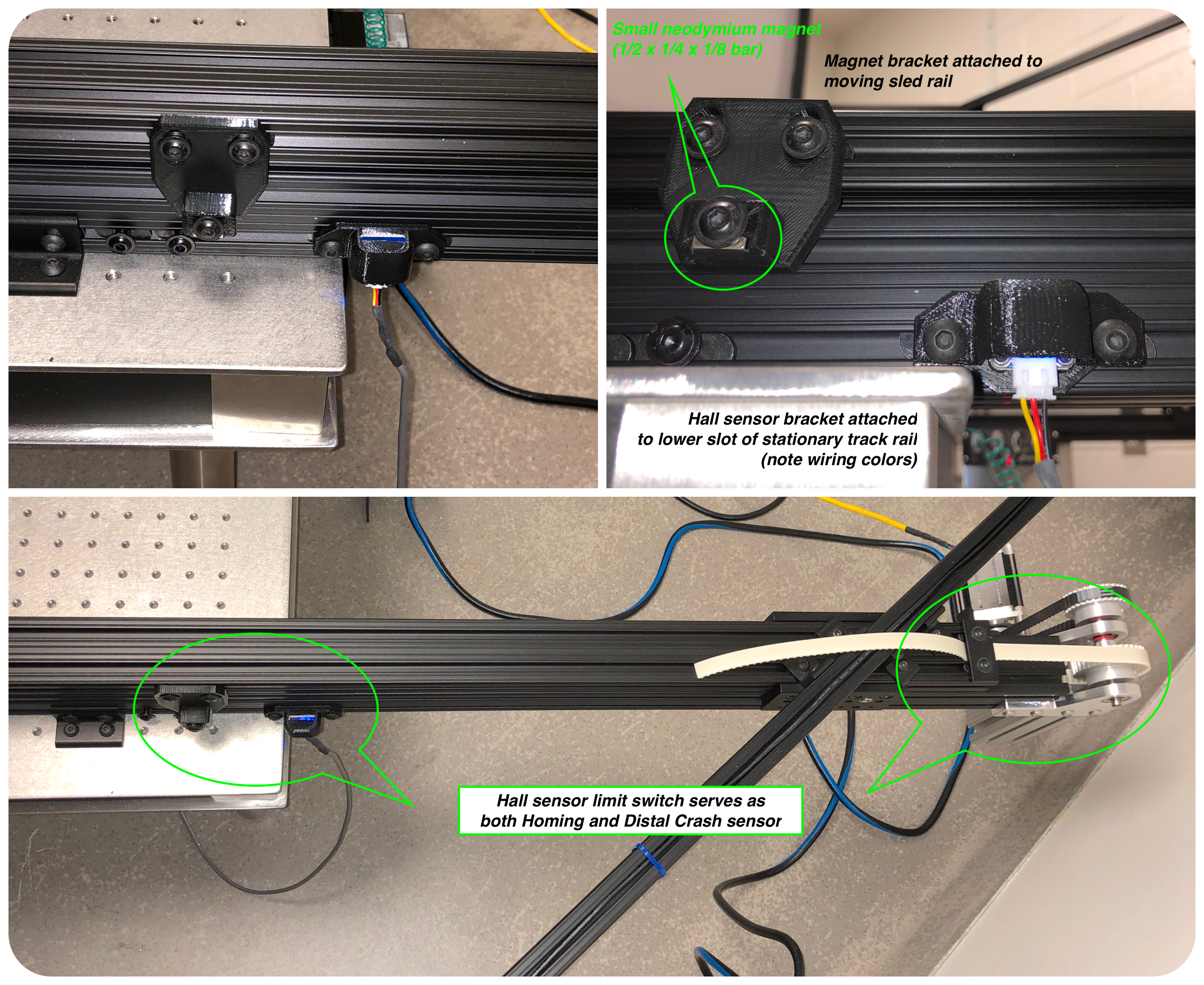

Homing & limit switch configuration:

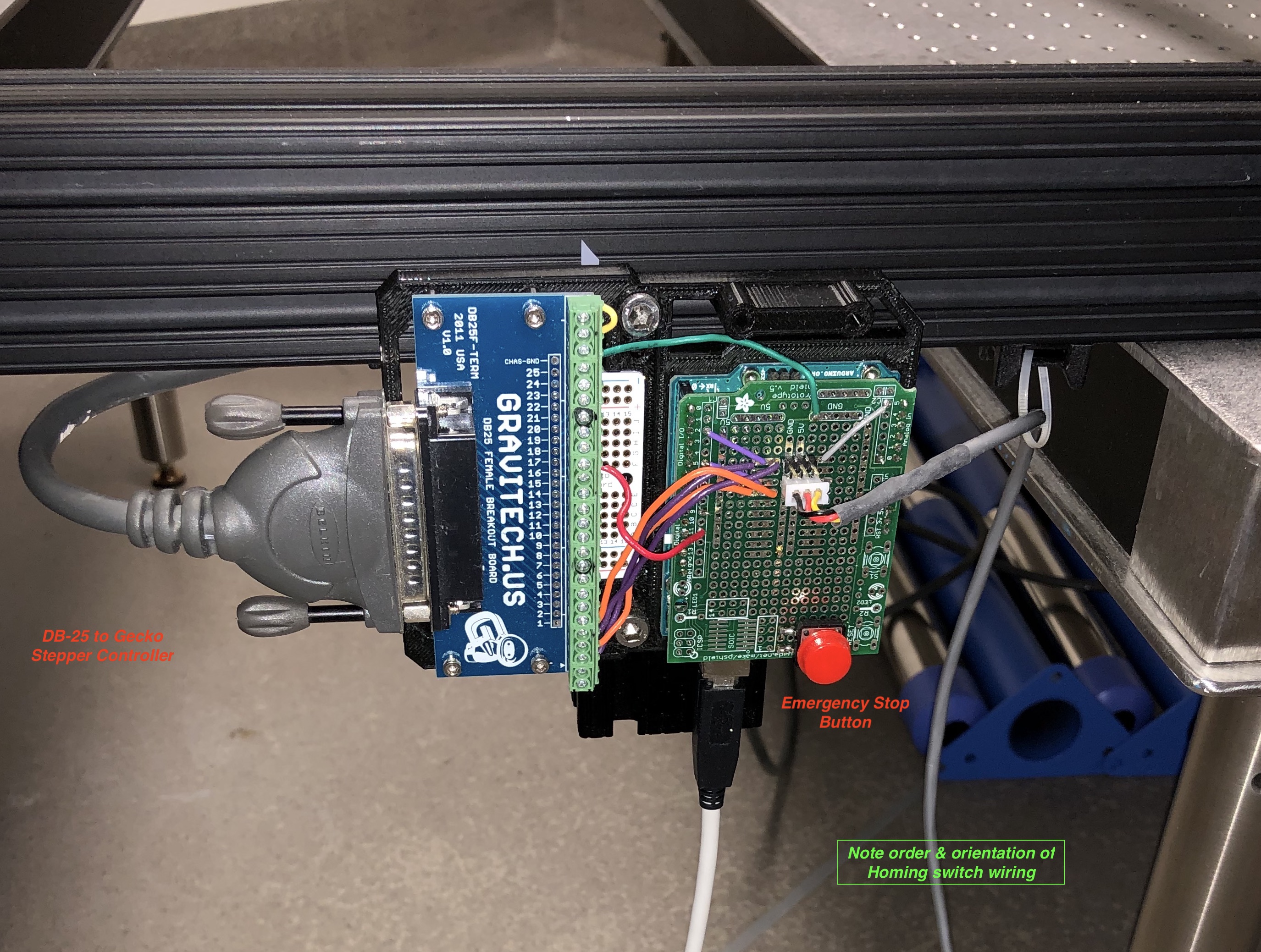

Arduino mount & wiring:

Motor mount configuration:

Stepper motor wiring:


At the core of the ViewDist system is a ProPixx projector (VPixx Technologies Inc.) with ultra-short throw optics & a DepthQ 3D polarization module for passive 3D stimulus presentation.

The 'RB3D' mode of the ProPixx provides binocular 3D stimulus presentation at a spatial resolution of 1080p and a temporal resolution of 120 Hz per eye (240 Hz total). Beyond simple binocular presentation, VPixx's custom RB3D mode uses a specialized 'galloping' stereo frame presentation sequence to minimize potential 3D artifacts that can arise from temporally interleaved binocular stimulation (e.g. the Pulfrich effect).

(If that sounds like Greek to you, don't worry; trust that 3D stimulus presentation has been tuned to account for the finest of details. If you DO recognize that potential confound, high five to you; check out details of the frame timing buried within the ProPixx demo docs [& high five to members of Bruce Cumming's lab for working with VPixx to develop the specialized timing].)


Built around an Eyelink 1000 (SR Research), the research standard in high spatial & temporal resolution eye tracking, the ViewDist eye tracking arch provides stable, repeatable, and out-of-the-way binocular eye tracking at 1 kHz per eye without impeding the expansive field of view.

Binocular calibration

(See PLDAPS 4.4.0 revisions on GitHub for implementation details)

Although binocular eye tracking is a feature of this eye tracker model, the default/on-device instantiation of binocular tracking & calibration ends up being something closer to 'bi-monocular tracking with fixed viewing geometry'. While I'm a major proponent of 'let the hardware do its job & we'll do the rest', the unique requirements of binocular eye tracking across significant changes in physical viewing geometry necessitated a complete refactoring of eye calibration, moving it from the Eyelink device/CPU onto the PLDAPS experimental control computer/software.

PLDAPS now independently maps the raw eye position signals from the Eyelink to physical calibration points presented at various physical viewing distances. Using object-oriented components in Matlab, independent geometric transforms can be readily swapped out to match any on-the-fly change in ViewDist position.
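The idea of keeping one calibration transform per viewing distance and swapping the active one when the display moves can be sketched as below. This is an illustrative Python example, not the PLDAPS API (which is written in Matlab): the class names, the use of a simple 2D affine map, and the nearest-distance selection rule are all assumptions chosen to keep the sketch short.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class AffineTransform2D:
    """Maps raw tracker (x, y) to screen (x, y) as gain * raw + offset."""
    gain: Tuple[Tuple[float, float], Tuple[float, float]]
    offset: Tuple[float, float]

    def apply(self, raw_xy):
        x, y = raw_xy
        (a, b), (c, d) = self.gain
        return (a * x + b * y + self.offset[0],
                c * x + d * y + self.offset[1])


class CalibrationBank:
    """Holds one transform per calibrated viewing distance (hypothetical)."""

    def __init__(self):
        self._transforms: Dict[float, AffineTransform2D] = {}
        self._active_distance: Optional[float] = None

    def store(self, distance_cm, transform):
        self._transforms[float(distance_cm)] = transform

    def set_viewing_distance(self, distance_cm):
        """Activate the transform calibrated nearest to distance_cm."""
        if not self._transforms:
            raise RuntimeError("no calibrations stored")
        self._active_distance = min(
            self._transforms, key=lambda d: abs(d - distance_cm))
        return self._active_distance

    def to_screen(self, raw_xy):
        if self._active_distance is None:
            raise RuntimeError("call set_viewing_distance first")
        return self._transforms[self._active_distance].apply(raw_xy)


if __name__ == "__main__":
    bank = CalibrationBank()
    bank.store(30, AffineTransform2D(((2.0, 0.0), (0.0, 2.0)), (1.0, -1.0)))
    bank.store(120, AffineTransform2D(((0.5, 0.0), (0.0, 0.5)), (0.0, 0.0)))
    bank.set_viewing_distance(40)       # display moved near the viewer
    print(bank.to_screen((3.0, 4.0)))   # uses the 30 cm calibration
```

Keeping each transform immutable and selecting among them, rather than mutating a single calibration in place, means a display move never leaves a half-updated mapping in use.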

The computational demands incurred by shifting eye tracking computations onto the stimulus/experimental control machine are mitigated by an efficient & flexible OOP implementation within PLDAPS, with the added benefit that all behavioral I/O transformations are completely encompassed within the data structure of each experimental trial.

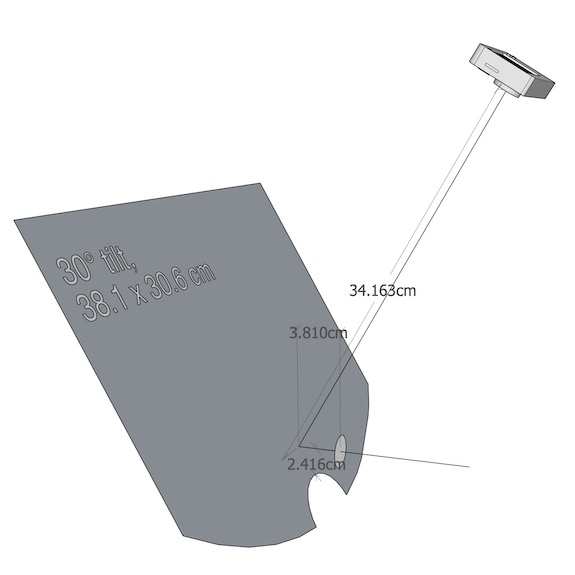

Custom hot mirror

To maximize the unobstructed field of view (FOV), a custom hot mirror was designed to fully encompass the ViewDist display extent at most viewing distances. To further minimize interference at close viewing distances (e.g. 30–40 cm; within peripersonal space), a tighter 30° mirror (60° optical path) was chosen over the typical 45° configuration (another benefit of the fully custom design).
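The relationship between mirror tilt and optical path angle follows from the law of reflection: a ray is deviated by twice the angle between the ray and the mirror surface. The short Python sketch below checks this with a vector reflection, assuming the quoted mirror angle is measured between the mirror surface and the incoming line of sight (an assumption; the function names are illustrative).

```python
import math


def reflect(direction, normal):
    """Reflect a 2D direction vector off a mirror with unit normal `normal`."""
    dx, dy = direction
    nx, ny = normal
    dot = dx * nx + dy * ny
    return (dx - 2.0 * dot * nx, dy - 2.0 * dot * ny)


def deviation_angle_deg(mirror_angle_deg):
    """Angle (deg) between incoming and reflected rays.

    mirror_angle_deg is the tilt of the mirror surface relative to the
    incoming ray, valid here for angles strictly between 0 and 90 deg.
    """
    theta = math.radians(mirror_angle_deg)
    incoming = (1.0, 0.0)                            # ray travelling along +x
    normal = (-math.sin(theta), math.cos(theta))     # unit normal of the mirror
    rx, ry = reflect(incoming, normal)
    return abs(math.degrees(math.atan2(ry, rx)))


if __name__ == "__main__":
    for tilt in (30.0, 45.0):
        print(f"{tilt:.0f} deg mirror -> {deviation_angle_deg(tilt):.0f} deg path")
```

Under that assumption a 30° tilt folds the optical path by 60°, compared with the 90° fold of a conventional 45° hot mirror.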

Lateral supports of the eye tracker arch are mounted on linear bearing slides to allow fine-tuned positioning of the mirror fore & aft, and to allow the entire apparatus to slide 8–10" forward, out of the way during setup of electrophysiology devices.


PLDAPS Toolbox

PLexon DAtapixx PSychtoolbox (PLDAPS) is an advanced neurophysiology & psychophysics experiment toolbox for MATLAB.

PLDAPS was designed in-house; all 3D stimulus applications of PLDAPS were designed & written by Thaddeus Czuba.

Coded in Matlab, the PLDAPS toolbox provides a robust software framework for neuroscience experimental design & control through an extensive array of experimental hardware for behavioral I/O, neural signal acquisition, eye tracking, and 3D visual stimulus presentation at high frame-rates & precision.

Newest PLDAPS developments (available from my GitHub repo) incorporate object-oriented coding of display & ViewDist positioning components to seamlessly synchronize viewing distance dependencies (i.e. binocular eye tracking calibration, conversions between visual & physical frames of reference [pix-per-deg, deg-per-cm], stepper motor [re]positioning, etc.).
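As an illustration of why these conversions must be recomputed whenever the display moves, here is a minimal Python sketch of deg-per-cm and pix-per-deg at the screen center. It is not PLDAPS code: the function names are hypothetical, and it assumes the 1080p image (1920 horizontal pixels) spans the full 1.8 m screen width, which may not match the actual projected image size.

```python
import math


def deg_per_cm(viewing_distance_cm):
    """Visual angle (deg) of a 1 cm segment centered on the line of sight."""
    if viewing_distance_cm <= 0:
        raise ValueError("viewing distance must be positive")
    return math.degrees(2.0 * math.atan(0.5 / viewing_distance_cm))


def pix_per_deg(viewing_distance_cm, screen_width_cm=180.0,
                horizontal_pixels=1920):
    """Pixels per degree of visual angle at the screen center.

    Assumes the image fills the full screen width (an assumption made
    for this sketch, not a measured property of the system).
    """
    pix_per_cm = horizontal_pixels / screen_width_cm
    return pix_per_cm / deg_per_cm(viewing_distance_cm)


if __name__ == "__main__":
    for distance_cm in (30, 60, 120):
        print(f"{distance_cm:>4} cm: {deg_per_cm(distance_cm):.3f} deg/cm, "
              f"{pix_per_deg(distance_cm):.2f} pix/deg")
```

Because both quantities scale almost linearly with distance near the screen center, a stimulus specified in degrees must be redrawn at a different pixel size after every change in viewing distance.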

Related publications:

1. Czuba, T. B., Cormack, L. K., & Huk, A. C. (2019). Functional architecture and mechanisms for 3D direction and distance in middle temporal visual area. Vision Sciences Society 2019 Annual Meeting.

2. Whritner, J. A., Czuba, T. B., Cormack, L. K., & Huk, A. C. (2021). Spatiotemporal integration of isolated binocular 3D motion cues. Journal of Vision, 21(10):2, 1–12.

3. Bonnen, K., Czuba, T. B., Whritner, J. A., Kohn, A., Huk, A. C., & Cormack, L. K. (2020). Binocular viewing geometry shapes the neural representation of the dynamic three-dimensional environment. Nature Neuroscience, 23(1), 113–121.